First, build an image for the service. The service code is very simple (written in Go), in `app.go`:

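```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"os"
	"time"
)

func main() {
	addr := ":8877"

	// Reply with the current time, the hostname (the pod name when running
	// in Kubernetes) and a hard-coded version string.
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		hostname, _ := os.Hostname()
		fmt.Fprintf(w, "Hello, %s %s, version 2\n", time.Now(), hostname)
	})

	log.Fatal(http.ListenAndServe(addr, nil))
}
```

Next, write a two-stage (multi-stage) Dockerfile to build the image: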

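```dockerfile
# Stage 1: build the binary
FROM golang:1.18-alpine AS builder

ENV GO111MODULE=on \
    GOPROXY=https://goproxy.cn,direct \
    GOOS=linux \
    GOARCH=amd64

WORKDIR /go/src
ADD . .
RUN go mod tidy && go build -ldflags="-w -s" -o hello .

# Stage 2: copy only the binary into a small runtime image
FROM alpine:latest
WORKDIR /root/
COPY --from=builder /go/src/hello .

# Make the binary executable
RUN chmod +x /root/hello

# Expose the port the service listens on
EXPOSE 8877

# Exec form, so the binary runs as PID 1 and receives SIGTERM on pod shutdown
ENTRYPOINT ["/root/hello"]
```

Build the images, tag them, and push them to the image registry: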

```shell
# Version 2
docker build -t gohello:v2 .

# Version 3 (the only change is "version 2" -> "version 3" in app.go)
docker build -t gohello:v3 .

# Tag && push version 2
docker tag gohello:v2 proaholic/gohello:v2
docker push proaholic/gohello:v2

# Tag && push version 3
docker tag gohello:v3 proaholic/gohello:v3
docker push proaholic/gohello:v3
```

That completes the image part.
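Before moving on to Kubernetes, you can sanity-check the handler logic in-process, without Docker. This sketch assumes the anonymous handler from `app.go` is pulled out into a named function. `helloHandler`, its `version` parameter and `fetchBody` are hypothetical names introduced here, not part of the original code. It uses only the standard library's `net/http/httptest`.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"os"
	"strings"
	"time"
)

// helloHandler is a hypothetical refactor of the anonymous handler in app.go:
// it writes the current time, the hostname (the pod name inside Kubernetes)
// and the given version string.
func helloHandler(version string) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		hostname, _ := os.Hostname()
		fmt.Fprintf(w, "Hello, %s %s, version %s\n", time.Now(), hostname, version)
	}
}

// fetchBody sends one GET / through h in-process and returns the response body.
func fetchBody(h http.Handler) (string, error) {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	h.ServeHTTP(rec, req)
	body, err := io.ReadAll(rec.Result().Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	body, err := fetchBody(helloHandler("2"))
	if err != nil {
		fmt.Fprintln(os.Stderr, "read failed:", err)
		os.Exit(1)
	}
	fmt.Print(body)
	// The rollout check later relies on this trailing marker.
	if !strings.HasSuffix(body, ", version 2\n") {
		fmt.Fprintln(os.Stderr, "unexpected body:", body)
		os.Exit(1)
	}
}
```

Run it with `go run .` next to the sketch. Extracting the handler also makes it easy to build v2 and v3 from one code base instead of editing the literal by hand.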

Create the Deployment manifest, `gohello-deployment-v2.yaml`:

apiVersion: apps/v1

kind: Deployment

metadata:

name: kubia

spec:

replicas: 3

selector:

matchLabels:

app: kubia

template:

metadata:

name: kubia

labels:

app: kubia

spec:

containers:

- image: proaholic/gohello:v2 # 这里先用v2版本,等下滚动更新到v3版本

name: gohello

ports:

- containerPort: 8877

---

apiVersion: v1

kind: Service

metadata:

name: kubia # 指定svc的名称

spec:

type: LoadBalancer # 负载均衡

selector:

app: kubia # 凡是 app: kuia 都是这个svc的后端 pod

ports:

- port: 80 # 这是内部集群的端口

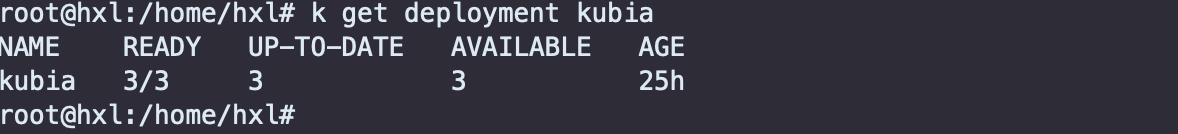

targetPort: 8877 # 这是pod实际的节点端口在k8s服务器上创建 deployment

```shell
# k is a shell alias for kubectl (alias k=kubectl)
k create -f gohello-deployment-v2.yaml

# Check the result
k get deployment kubia
```
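The manifest does not set `spec.strategy`, so the Deployment falls back to the Kubernetes default. That default is a `RollingUpdate` with `maxSurge` and `maxUnavailable` both at 25%. Kubernetes rounds the surge percentage up and the unavailable percentage down. If both come out as zero, it uses a `maxUnavailable` of 1 so the rollout can still progress.

The sketch below works through that arithmetic for `replicas: 3`. `resolvePercent` and `rolloutBounds` are hypothetical helpers that mirror the documented rounding rules. They are not the controller's actual code.

```go
package main

import "fmt"

// resolvePercent converts a percentage of replicas into an absolute pod count,
// rounding up (as for maxSurge) or down (as for maxUnavailable).
func resolvePercent(percent, replicas int, roundUp bool) int {
	if roundUp {
		return (percent*replicas + 99) / 100
	}
	return percent * replicas / 100
}

// rolloutBounds returns the most pods that may exist and the fewest that must
// stay available while a RollingUpdate is in progress.
func rolloutBounds(replicas, surgePct, unavailPct int) (maxPods, minAvailable int) {
	surge := resolvePercent(surgePct, replicas, true)
	unavailable := resolvePercent(unavailPct, replicas, false)
	if surge == 0 && unavailable == 0 {
		// Both resolved to zero: allow one unavailable pod so the rollout can proceed.
		unavailable = 1
	}
	return replicas + surge, replicas - unavailable
}

func main() {
	// replicas: 3 comes from gohello-deployment-v2.yaml; 25%/25% are the defaults.
	maxPods, minAvailable := rolloutBounds(3, 25, 25)
	fmt.Printf("at most %d pods, at least %d available\n", maxPods, minAvailable)
}
```

For three replicas this prints `at most 4 pods, at least 3 available`. With these defaults, the rollout later in this post runs one extra v3 pod and only then removes a v2 pod, so capacity never drops below three.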

Check the Service status:

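```shell
k get svc kubia

# output
NAME    TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubia   LoadBalancer   10.110.195.240   <pending>     80:31812/TCP   25h
```

`EXTERNAL-IP` stays `<pending>` because this cluster has no cloud load-balancer provider. The Service is still reachable on its cluster IP from inside the cluster, and from outside on node port 31812.

Test it with curl against the cluster IP: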

```shell
while true; do curl http://10.110.195.240; sleep 1s; done

# output
Hello, 2022-12-01 11:00:44.023646891 +0000 UTC m=+656.443925111 kubia-5c896f4cf9-22mt4, version 2
Hello, 2022-12-01 11:00:45.042688407 +0000 UTC m=+659.819489348 kubia-5c896f4cf9-8mcpf, version 2
Hello, 2022-12-01 11:00:46.061770402 +0000 UTC m=+655.767289175 kubia-5c896f4cf9-mb6np, version 2
Hello, 2022-12-01 11:00:47.082285772 +0000 UTC m=+661.859086695 kubia-5c896f4cf9-8mcpf, version 2
```

Next, update to v3:

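```shell
# Point the gohello container at the v3 image
k set image deployment kubia gohello=proaholic/gohello:v3
# Kubernetes automatically rolls the v2 pods over to v3

# Run the loop again
while true; do curl http://10.110.195.240; sleep 1s; done

# output
Hello, 2022-12-01 11:00:45.042688407 +0000 UTC m=+659.819489348 kubia-5c896f4cf9-8mcpf, version 3
Hello, 2022-12-01 11:00:46.061770402 +0000 UTC m=+655.767289175 kubia-5c896f4cf9-mb6np, version 3
Hello, 2022-12-01 11:00:47.082285772 +0000 UTC m=+661.859086695 kubia-5c896f4cf9-8mcpf, version 3
```

With hundreds or thousands of instances, deploying the traditional way would wear out the ops team. With k8s, a release takes just one or two commands, and the same `set image` command rolls the update out to every replica, whether there are three or thousands.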
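To see the rolling update from the client side, you can replace the curl loop with a small Go client. It counts how many responses each version served while `set image` is in progress, so the v2/v3 overlap shows up as numbers. This is a sketch: the program name `watch-rollout`, the 60-request window and the 2-second timeout are arbitrary choices for illustration. It assumes the response format from `app.go` ends in `version N`.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"regexp"
	"time"
)

// versionRe matches the trailing "version N" that app.go prints.
var versionRe = regexp.MustCompile(`version (\d+)\s*$`)

// parseVersion returns the version number found in one response body.
func parseVersion(body string) (string, bool) {
	m := versionRe.FindStringSubmatch(body)
	if m == nil {
		return "", false
	}
	return m[1], true
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: watch-rollout http://<service-ip>")
		return
	}
	url := os.Args[1]
	client := &http.Client{Timeout: 2 * time.Second}
	counts := map[string]int{}

	// Poll once per second for about a minute, like the curl loop above.
	for i := 0; i < 60; i++ {
		resp, err := client.Get(url)
		if err != nil {
			counts["error"]++
		} else {
			body, _ := io.ReadAll(resp.Body)
			resp.Body.Close()
			if v, ok := parseVersion(string(body)); ok {
				counts["v"+v]++
			} else {
				counts["unparsed"]++
			}
		}
		time.Sleep(time.Second)
	}
	fmt.Println(counts)
}
```

Start it with `go run . http://10.110.195.240` from a node, then run `k set image ...` in another terminal. To follow the rollout from the Kubernetes side instead, `kubectl rollout status deployment kubia` waits until the new pods are fully rolled out.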